Janus Pro AI

Janus Pro AI: Image Generation

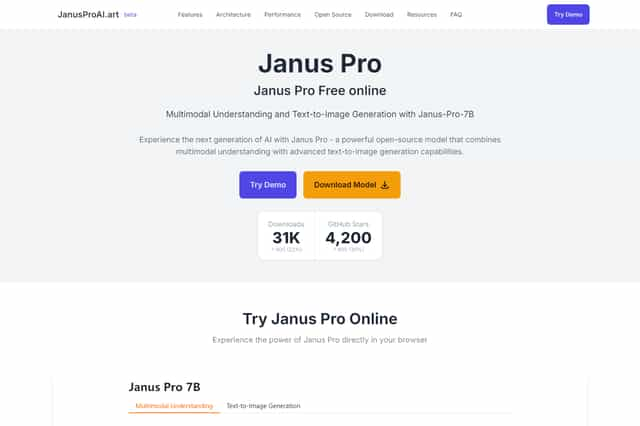

Janus Pro AI is an open-source multimodal AI model that integrates text understanding and image generation. Its 7B-parameter base model reports an MMBench score of 79.2 and a GenEval score of 0.80. Janus Pro AI is MIT licensed, which permits commercial use and community contributions.

Janus Pro AI Introduction

Janus Pro AI is an open-source multimodal AI model with over 4,200 GitHub stars and a reported growth rate of 30%. Its unified Transformer architecture combines text understanding and image generation through a multi-head attention mechanism, a cross-modal fusion layer, and an advanced vision encoder. Key technical innovations include an enhanced cross-attention mechanism, optimized token generation, efficient resource utilization, and a scalable design pattern.

The 7B-parameter base model has a 4096-token context window and supports mixed-precision and distributed training. Reported results include training that is 2x faster than baseline models, a benchmark score of 95%, an MMBench multimodal understanding score of 79.2, and a GenEval text-to-image generation score of 0.80.

The model is available in 1.5B and 7B parameter variants and is trained with the HAI-LLM framework, which is based on PyTorch. Training the 7B model takes approximately 14 days on a 32-node cluster with 8x A100 GPUs per node.

Janus Pro AI is available under the MIT license, which permits commercial use, modification, and distribution. The project was most recently updated in January 2025, which suggests active development and community support. Together with its benchmark results, this makes it a promising platform for a range of applications.

Janus Pro AI Features

Unified Multimodal Architecture of Janus Pro AI

Janus Pro AI uses a unified Transformer architecture with a multi-head attention mechanism that processes both textual and visual data. A cross-modal fusion layer integrates information from the text and image modalities, and an advanced vision encoder supports image understanding and generation.

Core Technical Innovations in Janus Pro AI

Four technical innovations underpin Janus Pro AI's performance. An enhanced cross-attention mechanism improves the model's ability to relate textual descriptions to visual features. Optimized token generation makes image synthesis faster and more efficient. Efficient resource utilization lowers computational demands, which makes Janus Pro AI more accessible. Finally, a scalable design pattern allows adaptation to different hardware and training scenarios.

Model Specifications of Janus Pro AI

Janus Pro AI is available in two parameter variants: 1.5B and 7B. The 7B model has a context window of 4096 tokens. It supports mixed-precision training, which improves training efficiency, and distributed training across multiple GPUs, which shortens training time. These options allow flexible deployment.
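To make the two variants concrete, the following back-of-the-envelope sketch estimates how much memory the weights alone would occupy at full and half precision. The parameter counts come from the text; the bytes-per-parameter table and the function name `weight_memory_gib` are illustrative assumptions, and the estimate ignores activations, optimizer state, and caches.

```python
# Rough weight-memory estimate. Assumption: weights are stored densely at a
# single precision; activations, optimizer state, and caches are ignored.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Return the memory needed to hold the weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


# Parameter counts as stated in the text (1.5B and 7B variants).
for name, params in [("1.5B", 1.5e9), ("7B", 7e9)]:
    for dtype in ("fp32", "fp16"):
        print(f"{name} {dtype}: {weight_memory_gib(params, dtype):.1f} GiB")
```

Halving the bytes per parameter halves the weight footprint, which is one reason reduced precision is attractive for both training and deployment.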

Performance Metrics of Janus Pro AI

Janus Pro AI reports the following results. Training is twice as fast as established baselines. The model achieves a benchmark score of 95%. Its MMBench multimodal understanding score is 79.2, which reflects its ability to understand relationships between text and images. Its GenEval text-to-image generation score is 0.80, which reflects its image generation quality.

Open Source Compatibility and Licensing of Janus Pro AI

Janus Pro AI is released under the MIT License. Commercial use is permitted, so businesses can build Janus Pro AI into their own applications. Modification and distribution are also allowed, and the community can contribute to the project's ongoing development.

Training Infrastructure and Resources for Janus Pro AI

Janus Pro AI is trained with the HAI-LLM training framework, which is based on PyTorch. Multi-node training is supported with up to 8x A100 GPUs per node. Training the 7B-parameter model takes approximately 14 days on a 32-node cluster.
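The figures above imply a total compute budget that is easy to work out. The sketch below is plain arithmetic on the stated numbers, assuming every node is fully populated with 8 GPUs and the cluster runs continuously for the full 14 days; the variable names are illustrative.

```python
# Figures taken from the text; full utilization for the whole run is an assumption.
NODES = 32
GPUS_PER_NODE = 8
TRAINING_DAYS = 14

total_gpus = NODES * GPUS_PER_NODE        # GPUs in the cluster
gpu_days = total_gpus * TRAINING_DAYS     # GPU-days for the 7B run
gpu_hours = gpu_days * 24                 # GPU-hours for the 7B run

print(f"GPUs: {total_gpus}")
print(f"GPU-days: {gpu_days}")
print(f"GPU-hours: {gpu_hours}")
```

Under these assumptions the 7B run uses 256 GPUs for roughly 86,000 GPU-hours.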

Janus Pro AI's Enhanced Cross-Attention Mechanism

The enhanced cross-attention mechanism in Janus Pro AI is described as a significant improvement over prior models. It captures the relationships between text and images in more detail, which leads to higher-quality image generation and a more accurate understanding of multimodal inputs.
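For readers unfamiliar with the term, the sketch below shows generic scaled dot-product cross-attention, in which queries from one modality (for example, text tokens) attend over keys and values from another (for example, image patches). It is a textbook illustration in pure Python, not Janus Pro AI's implementation, whose enhancements are not detailed here; the function names and the toy vectors are assumptions.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Generic scaled dot-product cross-attention.

    queries: vectors from one modality (e.g. text tokens).
    keys, values: vectors from the other modality (e.g. image patches).
    Returns one output vector per query: a weighted average of the values.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Mix the value vectors according to the attention weights.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Toy example: two "text" queries attend over three "image patch" keys/values.
text_queries = [[1.0, 0.0], [0.0, 1.0]]
patch_keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
patch_values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
for row in cross_attention(text_queries, patch_keys, patch_values):
    print([round(x, 3) for x in row])
```

Each output row is a blend of the patch values, weighted toward the patches whose keys best match the query.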

Janus Pro AI's Optimized Token Generation

Janus Pro AI's optimized token generation speeds up image generation. The improvements also contribute to faster training and lower computational cost.
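As background, the sketch below shows a plain autoregressive generation loop, assuming that synthesis proceeds one token at a time as the phrase "token generation" suggests. It shows only the unoptimized baseline that any such optimization would start from. The `toy_next_token_logits` function is a hypothetical stand-in for a real model, and nothing here reflects Janus Pro AI's actual code.

```python
import math
import random


def toy_next_token_logits(tokens, vocab_size):
    """Hypothetical stand-in for a model: favors (last token + 1) mod vocab_size."""
    logits = [0.0] * vocab_size
    logits[(tokens[-1] + 1) % vocab_size] = 5.0
    return logits


def generate(prompt_tokens, num_new_tokens, vocab_size, greedy=True, seed=0):
    """Append num_new_tokens tokens to a non-empty prompt, one step at a time."""
    rng = random.Random(seed)
    tokens = list(prompt_tokens)
    for _ in range(num_new_tokens):
        # Each step conditions on everything generated so far.
        logits = toy_next_token_logits(tokens, vocab_size)
        if greedy:
            next_token = max(range(vocab_size), key=lambda i: logits[i])
        else:
            # Sample in proportion to exp(logit), i.e. from the softmax distribution.
            weights = [math.exp(l) for l in logits]
            next_token = rng.choices(range(vocab_size), weights=weights)[0]
        tokens.append(next_token)
    return tokens


print(generate([0], num_new_tokens=4, vocab_size=8))
```

Because every new token requires another pass through the model, the cost of generation grows with the number of tokens produced, which is why the per-token step is a natural target for optimization.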

Janus Pro AI's Efficient Resource Utilization

Janus Pro AI is designed for efficient resource utilization. The model’s architecture and training processes are optimized to minimize computational requirements while maximizing performance, which makes the model accessible to a wider range of users and applications.

Janus Pro AI's Scalable Design Pattern

The scalable design pattern of Janus Pro AI enables deployment across a range of hardware configurations and training environments, so the model remains usable at different resource capacities.

Janus Pro AI Frequently Asked Questions

Janus Pro AI: Model Architecture Inquiry

What is the underlying architecture of the Janus Pro AI model? What are its key components, such as the type of Transformer architecture, the attention mechanism, the cross-modal fusion layer, and the vision encoder? The report mentions a unified multimodal architecture; can you elaborate on this aspect of Janus Pro AI?

Janus Pro AI: Technical Innovation Deep Dive

What specific technical innovations distinguish Janus Pro AI from other multimodal AI models? The report highlights enhanced cross-attention mechanisms, optimized token generation, efficient resource utilization, and a scalable design pattern. Could you provide more detail on each of these innovations within the Janus Pro AI framework?

Janus Pro AI: Performance Metrics and Benchmarks

What are the key performance metrics of Janus Pro AI, and how does it compare to other existing models? The report mentions training speed, benchmark scores (95%), MMBench Multimodal Understanding Score (79.2), and GenEval Text-to-Image Generation Score (0.80). Can you elaborate on the benchmarks used and the significance of these scores for Janus Pro AI?

Janus Pro AI: Model Specifications and Variants

What are the model specifications for Janus Pro AI, including the number of parameters, context window size, and training support? The document notes a 7B-parameter base model with a 4096-token context window and support for mixed-precision and distributed training. Are other parameter variants available, such as the 1.5B version mentioned?

Janus Pro AI: Deployment, Licensing, and Community Aspects

How can Janus Pro AI be deployed, and what licensing terms apply to its use? The report states that Janus Pro AI is open source under the MIT license and that commercial use is permitted. What support does the community receive, and how can contributors take part in the project?

Janus Pro AI: Training Infrastructure and Resource Requirements

What type of training infrastructure is required to train the Janus Pro AI model? The report mentions the HAI-LLM training framework, PyTorch, multi-node training support (8x A100 GPUs/node), and a training duration of 14 days on a 32-node cluster for the 7B model. What are the resource requirements for training different variants of Janus Pro AI?

Janus Pro AI: Recent Developments and Future Outlook

What recent activity surrounds Janus Pro AI, and what is the outlook for its future development? The report mentions updates in January 2025, which indicates an active project. What future development is planned, given the community support and observed activity?