OpenRouter: AI Models & Tools Platform

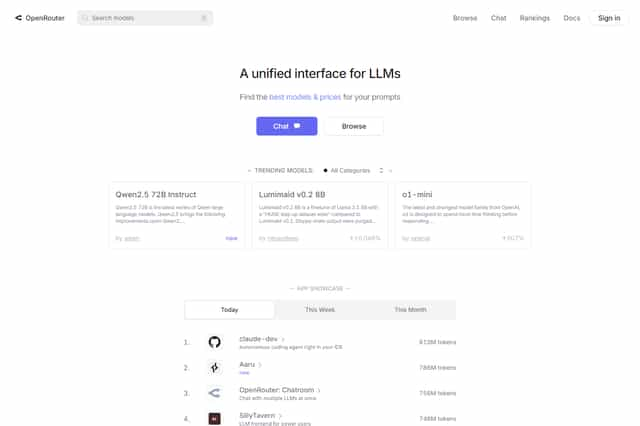

OpenRouter is a platform that gives users access to a wide range of advanced language models and tools for chatting, writing, and other tasks through a single API. Its features include model routing, streaming output, and tool integration, which together support a broad range of applications.

OpenRouter Introduction

OpenRouter.ai is a platform that provides a diverse range of language models and tools, helping users apply them to tasks such as chatting and creative writing. Its requests and responses closely follow OpenAI's Chat API, with a few minor differences. Users customize requests through parameters such as model, messages, tools, and provider. For example, a request can name a specific model, such as mistralai/mixtral-8x7b-instruct or openai/gpt-3.5-turbo, and give each message a role (user, assistant, or system) and content. If the model parameter is omitted, OpenRouter.ai uses the user's or payer's default model; otherwise, the model must be chosen from the supported model list and include its organization prefix.

OpenRouter.ai selects the most cost-effective, best-performing GPU to handle each request and automatically switches to other providers or GPUs if the request encounters a 5xx error or a rate limit. It also supports Server-Sent Events (SSE) streaming for all models; adding stream: true to the request body enables it. The stream may include "comment" payloads, which clients should ignore. If the chosen model does not support a given request parameter (e.g., logit_bias or top_k), that parameter is ignored and the rest are forwarded to the underlying model API.

OpenRouter.ai also supports tool calls: users pass a tools parameter describing functions the model may call. Each tool is of the function type and contains the function's description, name, and parameters. Separately, message content can consist of text parts and image parts. Users can add their applications to OpenRouter.ai's rankings by setting headers such as HTTP-Referer and X-Title; when traffic passes through a reverse proxy server such as Caddy or Nginx, the proxy should be configured to send these headers.

OpenRouter.ai offers a variety of language models, including Reflection Llama-3.1 70B, Euryale 70B v2.1, and Mistral 7B Instruct v0.2, each with its own strengths. For example, Reflection Llama-3.1 70B uses a new technique called Reflection-Tuning to improve reasoning accuracy, while Euryale 70B v2.1 focuses on creative role-playing. To use the platform, users obtain an API key from OpenRouter.ai and include it in their requests; the optional HTTP-Referer and X-Title headers display application information in OpenRouter.ai's rankings. Getting started takes only a simple API request, and example code shows how to make requests from TypeScript, Python, or Ruby, or through OpenAI's client API. In short, OpenRouter.ai is a flexible, feature-rich platform that supports many language models and tool calls, making it suitable for a wide range of applications.
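The request format described above can be sketched as a small helper that assembles an OpenAI-style chat request body. This is a minimal illustration, not an official client: the function name build_chat_request is hypothetical, it only accepts the three roles named above, and it checks the organization prefix with a simple "/" test.

```python
import json
from typing import Optional


def build_chat_request(messages: list, model: Optional[str] = None, stream: bool = False) -> dict:
    """Assemble an OpenAI-style chat request body (hypothetical helper).

    When `model` is omitted, the key is left out so the account's default model applies.
    """
    allowed_roles = {"system", "user", "assistant"}  # the roles described in the text
    for message in messages:
        if message.get("role") not in allowed_roles:
            raise ValueError(f"unsupported role: {message.get('role')!r}")

    body: dict = {"messages": messages}
    if model is not None:
        # Model IDs carry an organization prefix, e.g. "openai/gpt-3.5-turbo".
        if "/" not in model:
            raise ValueError("model must include an organization prefix")
        body["model"] = model
    if stream:
        body["stream"] = True  # request SSE streaming
    return body


request = build_chat_request(
    [
        {"role": "system", "content": "You are a concise assistant."},
        {"role": "user", "content": "Summarize SSE in one sentence."},
    ],
    model="mistralai/mixtral-8x7b-instruct",
)
print(json.dumps(request, indent=2))
```

Leaving model out of the body entirely, rather than sending an empty value, is what lets the default-model behavior apply.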

OpenRouter Features

Model Routing

The OpenRouter platform routes each request according to the user's needs and constraints, weighing cost-effectiveness against performance. If users do not specify a model through the model parameter, OpenRouter uses the default model set by the user or the paying party. When a model is specified, OpenRouter chooses the most suitable GPU to process the request; if the request hits a 5xx error or a rate limit, it automatically switches to an alternative provider or GPU.
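The fallback behavior described above happens on OpenRouter's side, so clients do not implement it. The following is only a toy simulation of the idea, not OpenRouter's actual routing logic: the provider names and prices are made up, candidates are ordered by price alone (a simplification of "cost-effective and best-performing"), and a 429 status is assumed to signal a rate limit.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


def should_fall_back(status: int) -> bool:
    """5xx errors and 429 (assumed rate-limit signal) trigger a switch to the next provider."""
    return status == 429 or 500 <= status <= 599


@dataclass
class Provider:
    name: str  # hypothetical provider label
    price: float  # hypothetical price, used only for ordering
    send: Callable[[dict], Tuple[int, str]]  # returns (HTTP status, response body)


def route(request: dict, providers: list) -> Tuple[str, str]:
    """Try providers from cheapest to most expensive, moving on after 5xx or 429."""
    last_status = None
    for provider in sorted(providers, key=lambda p: p.price):
        status, body = provider.send(request)
        if status == 200:
            return provider.name, body
        if not should_fall_back(status):
            # Design choice of this sketch: a client error such as 400 would
            # fail on every provider, so stop instead of retrying.
            raise RuntimeError(f"{provider.name} rejected the request with {status}")
        last_status = status
    raise RuntimeError(f"all providers failed; last status {last_status}")


providers = [
    Provider("provider-a", 0.5, lambda req: (503, "")),
    Provider("provider-b", 0.7, lambda req: (200, "hello")),
    Provider("provider-c", 0.2, lambda req: (429, "")),
]
print(route({"messages": []}, providers))
```

Here provider-c is tried first and is rate-limited, provider-a returns a 503, and the request finally succeeds on provider-b.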

Model Selection

OpenRouter offers a diverse range of advanced language models, each with its own strengths. Prominent examples include Reflection Llama-3.1 70B, Euryale 70B v2.1, and Mistral 7B Instruct v0.2. Reflection Llama-3.1 70B uses a new Reflection-Tuning technique to improve reasoning accuracy, Euryale 70B v2.1 specializes in creative role-playing, and Mistral 7B Instruct v0.2 is an open-source model fine-tuned for following instructions.

Stream Output

OpenRouter supports streaming output through Server-Sent Events (SSE), so responses arrive incrementally instead of all at once. Users enable it by including stream: true in the request body. The stream may contain "comment" payloads (SSE lines beginning with a colon), which clients should ignore.
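A client consuming the stream needs to skip those comment lines. The sketch below parses already-decoded SSE lines; it assumes OpenAI-style streaming, where each event carries one `data:` line holding a JSON chunk with a `choices[0].delta` object and the stream ends with `data: [DONE]`. The sample stream, including its comment line, is illustrative rather than captured output.

```python
import json
from typing import Iterable, Iterator


def iter_sse_data(lines: Iterable[str]) -> Iterator[dict]:
    """Yield parsed JSON payloads from SSE lines, skipping comments and blank lines."""
    for raw in lines:
        line = raw.rstrip("\r\n")
        if not line or line.startswith(":"):
            # Blank lines separate events; lines starting with ":" are SSE comments.
            continue
        if not line.startswith("data:"):
            continue  # ignore other SSE fields such as "event:" or "id:"
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":  # assumed end-of-stream marker
            return
        yield json.loads(payload)


stream = [
    ": OPENROUTER PROCESSING",
    "",
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "lo"}}]}',
    "",
    "data: [DONE]",
]
text = "".join(chunk["choices"][0]["delta"].get("content", "") for chunk in iter_sse_data(stream))
print(text)
```

Treating every colon-prefixed line as a comment follows the general SSE rule, so the parser does not need to know the exact wording of any keep-alive message.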

Tools and Function Calls

OpenRouter supports tool (function) calling through a tools parameter in the request. Each tool is of the function type and describes a function by its name, description, and parameters. The model can then respond with a request to call one of these functions, which lets applications extend what the model can do.
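As a rough illustration of the tools parameter, the sketch below builds one function tool and dispatches the resulting tool calls. It assumes OpenAI's tool-calling format (a `tools` list of `{"type": "function", "function": {...}}` entries, with `parameters` given as JSON Schema, and a response message carrying `tool_calls` whose `arguments` is a JSON string). The weather tool, its schema, and both helper names are hypothetical.

```python
import json


def make_function_tool(name: str, description: str, parameters: dict) -> dict:
    """Wrap a function description in an OpenAI-style `tools` entry (hypothetical helper)."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": parameters,  # JSON Schema describing the arguments
        },
    }


# Hypothetical weather-lookup tool, for illustration only.
weather_tool = make_function_tool(
    "get_current_weather",
    "Get the current weather for a city.",
    {
        "type": "object",
        "properties": {
            "city": {"type": "string", "description": "City name"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["city"],
    },
)

request_body = {
    "model": "openai/gpt-3.5-turbo",
    "messages": [{"role": "user", "content": "What's the weather in Paris?"}],
    "tools": [weather_tool],
}


def dispatch_tool_calls(message: dict, registry: dict) -> list:
    """Run each requested tool locally and build `tool` messages to send back."""
    results = []
    for call in message.get("tool_calls", []):
        fn = registry[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        results.append(
            {"role": "tool", "tool_call_id": call["id"], "content": json.dumps(fn(**args))}
        )
    return results


print(json.dumps(request_body, indent=2))
```

The tool messages returned by dispatch_tool_calls would be appended to the conversation in a follow-up request so the model can use the results.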

Reverse Proxy Configuration

OpenRouter can be used behind reverse proxy servers such as Caddy or Nginx, which route application traffic to the OpenRouter API. When configuring such a proxy, set or pass through the HTTP-Referer and X-Title headers so that the application is credited in OpenRouter's rankings. These headers serve attribution; performance and security tuning is handled by the proxy's own configuration.

API Key and Configuration

OpenRouter users obtain API keys from the OpenRouter website and send them with each API request as a Bearer token in the Authorization header. The optional HTTP-Referer and X-Title headers identify the application so that it can appear in OpenRouter's app rankings.
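The header setup described above fits in a few lines. This is a sketch: the helper name openrouter_headers, the environment variable name OPENROUTER_API_KEY, the placeholder key, and the example site URL and title are all assumptions chosen for illustration.

```python
import os
from typing import Optional


def openrouter_headers(api_key: str, referer: Optional[str] = None, title: Optional[str] = None) -> dict:
    """Build request headers: the API key as a Bearer token plus optional ranking headers."""
    if not api_key:
        raise ValueError("an OpenRouter API key is required")
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    if referer:
        headers["HTTP-Referer"] = referer  # your site URL, used for app rankings
    if title:
        headers["X-Title"] = title  # your app's display name in rankings
    return headers


# Reading the key from an environment variable (name chosen here) keeps it out of source code.
headers = openrouter_headers(
    os.environ.get("OPENROUTER_API_KEY", "sk-or-placeholder"),
    referer="https://example.com",
    title="My Example App",
)
```

Because the ranking headers are optional, the helper omits them entirely when no value is given instead of sending empty strings.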

Quick Start

OpenRouter is straightforward to integrate into existing projects, and it provides code examples for popular languages such as TypeScript, Python, and Ruby.
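For a dependency-free starting point, the sketch below sends a chat completion request to `https://openrouter.ai/api/v1/chat/completions` using only Python's standard library. The function names, the environment variable name, and the choice of openai/gpt-3.5-turbo as the default model are assumptions for illustration; the response parsing assumes the OpenAI-style `choices[0].message.content` shape.

```python
import json
import os
import urllib.request

API_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(prompt: str, model: str, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST request with a single user message."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "openai/gpt-3.5-turbo") -> str:
    """Send the request and return the assistant's reply text."""
    api_key = os.environ["OPENROUTER_API_KEY"]  # assumed variable name
    req = build_request(prompt, model, api_key)
    with urllib.request.urlopen(req, timeout=60) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

With OPENROUTER_API_KEY set in the environment, calling ask("What is the meaning of life?") performs one network request and returns the model's reply; build_request can be inspected on its own without any network access.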

OpenRouter's Commitment to Accessibility and Openness

OpenRouter emphasizes accessibility by offering many open-source language models alongside proprietary ones, giving users flexibility and choice in selecting and using various models and tools.

OpenRouter: A Robust and Versatile Platform

OpenRouter is a robust, versatile platform that gives developers and users access to a wealth of advanced language models and tools for building new applications and experiences. Its focus on accessibility and ease of use, combined with its diverse range of models and tools, makes OpenRouter a powerful solution for many applications.

OpenRouter Frequently Asked Questions

What are the similarities and differences between OpenRouter.ai's request and response format and the OpenAI Chat API?

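OpenRouter.ai's request and response formats closely follow the OpenAI Chat API, with a few minor differences. For example, OpenRouter.ai accepts additional parameters such as provider, expects model IDs to include an organization prefix (e.g., openai/gpt-3.5-turbo), falls back to a default model when model is omitted, and ignores parameters that the chosen model does not support.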
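Because responses follow the OpenAI format, existing parsing code can usually be reused. The sketch below reads the serving model and the reply text from a chat completion; the sample response is hypothetical and trimmed to the fields used, and the field names assume the OpenAI response schema.

```python
import json
from typing import Tuple


def extract_reply(response: dict) -> Tuple[str, str]:
    """Return (model, assistant text) from an OpenAI-style chat completion response."""
    message = response["choices"][0]["message"]
    # Content may be null, e.g. when the model answers with tool calls instead of text.
    return response.get("model", ""), message.get("content") or ""


# Hypothetical response, trimmed to the fields used above.
sample = json.loads("""
{
  "id": "gen-123",
  "model": "mistralai/mixtral-8x7b-instruct",
  "choices": [
    {"message": {"role": "assistant", "content": "Hi there!"}, "finish_reason": "stop"}
  ]
}
""")
print(extract_reply(sample))
```

Reading the model field from the response, rather than assuming it matches the request, is useful when the request omitted model and a default was applied.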

What are the benefits of using OpenRouter.ai for model routing?

OpenRouter.ai selects the most cost-effective, best-performing GPU to process your requests. If a request encounters a 5xx error or a rate limit, OpenRouter.ai automatically switches to another provider or GPU, which helps keep service uninterrupted.

How does OpenRouter.ai handle streaming output?

OpenRouter.ai supports Server-Sent Events (SSE) streaming output for all models. Simply include stream: true in the request body to enable it. You may encounter "comment" payloads (SSE lines beginning with a colon) in the streaming output; these should be ignored.

What happens to non-standard parameters when using OpenRouter.ai?

If a selected OpenRouter model doesn't support a particular request parameter (e.g., logit_bias or top_k), the parameter is ignored, and the rest are forwarded to the underlying model API.
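The parameter handling described above can be pictured as a simple filter. This is a simulation of the described behavior, not OpenRouter's code: the model ID example-org/example-model and its SUPPORTED capability set are hypothetical, and real support varies by model and provider.

```python
from typing import Any, Dict, List, Tuple


def forward_supported_params(
    request: Dict[str, Any], supported: set
) -> Tuple[Dict[str, Any], List[str]]:
    """Split a request into the parameters a model accepts and those that are dropped."""
    forwarded = {k: v for k, v in request.items() if k in supported}
    ignored = sorted(k for k in request if k not in supported)
    return forwarded, ignored


# Hypothetical capability set for one hypothetical model.
SUPPORTED = {"model", "messages", "temperature", "max_tokens", "stream"}

request = {
    "model": "example-org/example-model",
    "messages": [{"role": "user", "content": "Hello"}],
    "temperature": 0.7,
    "top_k": 40,
    "logit_bias": {"50256": -100},
}
forwarded, ignored = forward_supported_params(request, SUPPORTED)
print(ignored)
```

The practical consequence is that an unsupported parameter does not cause an error, so it is worth checking a model's supported parameters when a setting such as top_k seems to have no effect.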

How does OpenRouter.ai support tool and function calling?

OpenRouter.ai lets you pass a tools parameter in your request describing functions the model can call. Each tool is of the function type and contains the function's description, name, and parameters.

How can I configure a reverse proxy server for OpenRouter.ai?

You can place a reverse proxy server such as Caddy or Nginx between your applications and OpenRouter.ai. By setting the necessary headers (e.g., HTTP-Referer and X-Title), you can have your application included in OpenRouter.ai's rankings.

What are some of the language models offered by OpenRouter.ai?

OpenRouter.ai provides various language models, including Reflection Llama-3.1 70B, Euryale 70B v2.1, and Mistral 7B Instruct v0.2. Each model has its own strengths: Reflection Llama-3.1 70B uses a new Reflection-Tuning technique to improve reasoning accuracy, while Euryale 70B v2.1 focuses on creative role-playing.

How do I get started with OpenRouter.ai?

You can get started quickly by using simple API requests. Example code showcases how to make requests using languages such as TypeScript, Python, or Ruby, and integrate with OpenAI's client API.

What are the benefits of using OpenRouter.ai for language model tasks?

OpenRouter.ai provides a flexible and feature-rich platform, offering support for multiple language models and tool calling, making it suitable for diverse application scenarios.